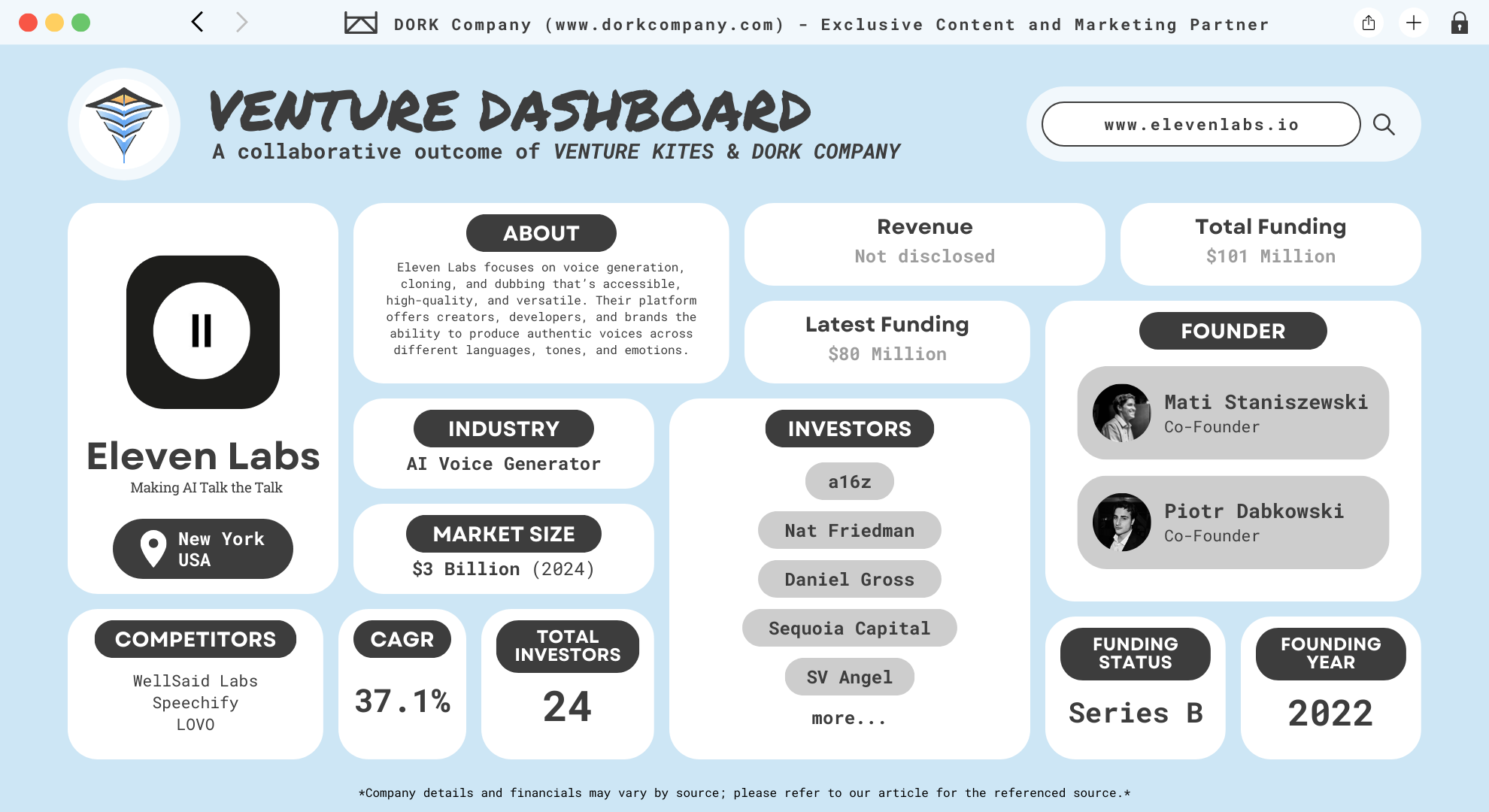

Eleven Labs: This Unicorn is Making AI Talk the Talk

Can AI make voices sound more real than reality? Eleven Labs thinks so, and they’re changing the game with voices that feel truly human. Founded in 2022 by Mati Staniszewski and Piotr Dabkowski, Eleven Labs emerged from a vision to transform AI audio content. Inspired by a shared frustration with subpar film dubbing, these childhood friends created a platform where text truly “speaks”. (Eleven Labs)

Based in New York, Eleven Labs focuses on voice generation, cloning, and dubbing that’s accessible, high-quality, and versatile. Their platform offers creators, developers, and brands the ability to produce authentic voices across different languages, tones, and emotions. This flexibility has attracted a wide user base, from podcasters and authors to video game designers and educators who aim to bring text-based content to life.

Eleven Labs’ services span more than entertainment—they provide solutions in industries like corporate training, customer service, and accessibility, where lifelike voice interactions can enhance user experience. From creating voices tailored to audiobooks to generating automated call center voices, Eleven Labs is pioneering a future where AI-generated voices are as impactful as those of real human speakers.

Sound Decisions: The Brains Behind the Voices

Founders Mati Staniszewski and Piotr Dabkowski met in Poland as kids, sharing a passion for technology and a knack for problem-solving. They took inspiration from one quirky, frustrating experience: watching poorly dubbed American movies in Poland. They wanted to eliminate language barriers in a way that feels natural, and their background in technology and AI provided the tools to make this vision a reality. Fast-forward to 2022, and they launched Eleven Labs to improve on the robotic tones typical of AI voices and AI-assisted dubbing.

Mati Staniszewski, Eleven Labs’ CEO, brings a stellar academic and professional background. He holds a First-Class Honours degree in Mathematics from Imperial College London and has experience at powerhouse companies like Opera, Palantir and BlackRock. At Palantir, he was a Deployment Strategist, focusing on data solutions, while at BlackRock, he worked in product development. (Mati Staniszewski)

Piotr Dabkowski, the CTO, adds significant machine learning expertise to the company. He earned a Master’s in Advanced Computer Science from the University of Cambridge and an undergraduate degree in Engineering from the University of Oxford, both with distinction. Before Eleven Labs, Piotr worked as a software engineer at Google, Opera and Tessian, where he contributed to cutting-edge AI projects. He also has a deep commitment to research; he published a notable paper on image saliency at NeurIPS, one of the top conferences in AI. (Piotr Dabkowski)

Talk of the Town: The Booming AI Voice Market

The AI voice generator market is soaring, with projections showing a leap from $3 billion in 2024 to a remarkable $20.4 billion by 2030. This rapid growth, driven by a 37.1% compound annual growth rate (CAGR), is fueled by rising demand across multiple industries like media, customer service, and e-commerce. Companies are leveraging AI voices for high-quality, engaging, and scalable content across languages and regions, enabling them to reach a global audience more effectively. (MarketsandMarkets)
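As a quick sanity check on these projections, the growth rate implied by the two endpoints can be computed directly. The sketch below assumes six compounding years (2024 to 2030) and uses the rounded dollar figures quoted above, so it lands near the reported 37.1% rather than exactly on it. The function name is illustrative and does not come from the cited report.

```python
def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Return the compound annual growth rate between two values."""
    return (end_value / start_value) ** (1 / years) - 1


# Rounded figures from the projection above, in billions of USD.
start_2024 = 3.0
end_2030 = 20.4
years = 2030 - 2024  # assumption: six compounding periods

cagr = implied_cagr(start_2024, end_2030, years)
print(f"Implied CAGR: {cagr:.1%}")
```

The result falls between 37% and 38%. The small gap from the published rate is what you would expect if the report's unrounded starting value is slightly above $3 billion.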

A major contributor to this surge is the demand for Text-to-Speech (TTS) and voice cloning technologies. TTS dominates the market, capturing around 70.5% of the share, largely due to its diverse applications in customer service, education, and accessibility. Voice cloning is gaining momentum as well, offering a personalized touch in marketing, entertainment, and accessibility solutions. Advanced machine learning enables AI-generated voices that sound increasingly realistic, enhancing experiences from virtual assistants to interactive media. (Market Research Future)

Regionally, North America leads the pack, holding over 37.9% of the market share. Home to industry giants like Google, Amazon, and Microsoft, the region benefits from early adoption and substantial investments in AI research. Europe follows with growth in automotive AI voice applications, while Asia Pacific is set to expand the fastest due to the rising popularity of smart devices and IoT solutions.

Cloud-based deployment is the preferred choice, accounting for 74.1% of market applications. Its scalability, cost-effectiveness, and accessibility make it ideal for businesses and consumers alike. This trend underscores the role of cloud technology in advancing AI voice solutions, especially for customer service, where businesses aim to automate and personalize user interactions more seamlessly.

Making Sound Waves: The Mission Behind Eleven Labs

Imagine a world where every piece of content is accessible in any language, without losing its emotional touch. That’s Eleven Labs’ vision: breaking down language barriers through advanced voice AI technology. Founded in 2022, Eleven Labs aims to make global communication seamless by providing high-quality, natural-sounding AI voice solutions. Their mission is to enhance content accessibility, allowing creators and businesses to reach diverse audiences with realistic voice dubbing and language adaptability.

Problems They Solve

The primary problem Eleven Labs tackles is the lack of accessible, high-quality voice solutions for content in multiple languages. Traditional text-to-speech solutions often sound robotic and fail to maintain the nuances of the original voice. Eleven Labs uses voice cloning to preserve these characteristics, allowing creators to reach a broader audience while retaining emotional depth and naturalness. Additionally, Eleven Labs addresses accessibility issues, providing tools that help people with disabilities communicate more effectively, such as AI voices for those with speech impairments.

Business Model

Eleven Labs operates on a flexible business model, offering subscriptions for individual creators and custom solutions for enterprises. They provide a comprehensive suite of AI tools for dubbing, narration, and multilingual support, with scalability for businesses of all sizes. Their innovative approach has quickly drawn major investments, leading to a unicorn valuation within two years. This model allows Eleven Labs to serve diverse industries—media, education, customer service, and more—by integrating AI voice technology into existing workflows to boost efficiency and expand reach.

Tools of the Trade: A Symphony of AI Voices

Eleven Labs delivers a comprehensive suite of AI-driven voice tools that redefine digital audio. From simple text-to-speech to sophisticated voice cloning, their product line allows creators, enterprises, and developers to bring content to life in any voice or language, with exceptional realism and adaptability.

1. Text-to-Speech (TTS)

Eleven Labs’ TTS feature enables text-to-audio transformation in 29 languages, making it ideal for a wide range of applications, from audiobooks to interactive media. The AI produces human-like inflection and emotion, bringing any text to life with natural-sounding voices.

2. Speech-to-Speech

This tool allows users to transform and adapt an existing voice recording. By maintaining the original emotion and tone, the Speech-to-Speech feature enables creators to repurpose audio without re-recording, which is useful for dubbing or adjusting pre-recorded content.

3. Text to Sound Effects

For creators looking to add dimension to their content, this feature translates text into matching sound effects, making it a valuable addition for interactive content, games, and media.

4. Voice Cloning

Eleven Labs offers two levels of voice cloning. Instant Voice Cloning (IVC) quickly replicates voices from short audio samples, while Professional Voice Cloning (PVC) builds a high-fidelity digital voice clone from longer recordings. This advanced cloning maintains tone and inflection, making it suitable for personalized user experiences, while strict security protocols protect user rights.

5. Voice Isolator

The Voice Isolator allows users to separate voice from background noise, ensuring that voices remain clear and distinct. This tool is perfect for high-quality dubbing, voiceovers, or any content requiring refined audio clarity.

6. Projects

Focused on long-form audio, this feature enables creators to edit and manage complex audio projects, including audiobooks, podcasting, and lengthy video content. With Projects, users can produce and fine-tune audio over extended durations, creating professional-grade outputs.

7. Dubbing Studio

With support across 29 languages, the Dubbing Studio automates localization for diverse audiences. It maintains the original voice’s tone and emotion, allowing creators to adapt content to new languages with ease. This feature is widely adopted in media and entertainment for international releases.

8. Voiceover Studio

Voiceover Studio is tailored for social media and commercial needs, enabling creators to produce captivating voiceovers quickly. From ad scripts to narration, this tool is optimized for short-form, high-impact audio content.

9. Voice Library

Eleven Labs’ Voice Library offers a collection of voices that the community can access and use. Users can share unique voices they have generated on the platform and earn rewards for sharing their creations.

10. ElevenReader

A mobile-friendly option, ElevenReader lets users listen to text-based content on the go. It transforms articles, scripts, and more into an audio format, providing convenient access to written material anywhere.

How Eleven Labs Works: The Tech Behind the Talk

The company’s technology, built on deep learning and synthetic voice generation, prioritizes both innovation and realism. Let’s look at the key technological pillars driving their success.

1. Natural Language Processing (NLP)

NLP breaks down text input into a form that the system can understand and process for speech synthesis. It involves several steps:

- Text Preprocessing: This includes converting text into phonemes, which are the sounds that make up words. The system also handles punctuation, abbreviations, and numbers by interpreting their meanings for accurate pronunciation.

- Prosody Analysis: Prosody involves rhythm, stress, and intonation patterns in speech. TTS systems analyze these elements to ensure that the output sounds natural and emphasizes the right syllables and words.
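To make the text preprocessing step more concrete, here is a minimal sketch of the normalization part only: expanding abbreviations and spelling out small numbers before any phoneme conversion happens. It is not Eleven Labs' pipeline. The lookup tables and function names are hypothetical, and a real system would rely on much larger lexicons and context-aware rules.

```python
import re

# Hypothetical lookup tables; a production system would use far larger ones.
ABBREVIATIONS = {"dr.": "doctor", "st.": "street", "vs.": "versus"}
ONES = ["zero", "one", "two", "three", "four", "five", "six", "seven",
        "eight", "nine", "ten", "eleven", "twelve", "thirteen", "fourteen",
        "fifteen", "sixteen", "seventeen", "eighteen", "nineteen"]
TENS = ["", "", "twenty", "thirty", "forty", "fifty",
        "sixty", "seventy", "eighty", "ninety"]


def number_to_words(n: int) -> str:
    """Spell out an integer from 0 to 99."""
    if n < 20:
        return ONES[n]
    tens, ones = divmod(n, 10)
    return TENS[tens] if ones == 0 else f"{TENS[tens]}-{ONES[ones]}"


def normalize(text: str) -> list[str]:
    """Lowercase, expand abbreviations and small numbers, drop punctuation."""
    tokens = []
    for raw in text.lower().split():
        if raw in ABBREVIATIONS:
            tokens.append(ABBREVIATIONS[raw])
            continue
        word = re.sub(r"[^\w']", "", raw)  # strip punctuation such as commas
        if word.isdecimal() and int(word) < 100:
            tokens.append(number_to_words(int(word)))
        elif word:
            tokens.append(word)
    return tokens


print(normalize("Dr. Smith lives at 29 Elm St."))
```

The normalized tokens would then be passed to a pronunciation lexicon or a learned model to obtain phonemes. That stage is left out here because it depends heavily on the language and the system.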

2. Speech Synthesis Models

Two primary methods have driven TTS advancements:

- Concatenative Synthesis: In traditional TTS, recorded human voice segments are “concatenated” or pieced together to form words and sentences. While this can create natural-sounding speech, it is limited by the recordings and struggles with flexibility and scalability.

- Neural Network-Based Synthesis (e.g., WaveNet, Tacotron): Neural networks revolutionized TTS with models that generate speech from scratch by learning speech patterns from large datasets. For example, Google’s WaveNet and Tacotron use deep learning to produce highly realistic, flexible, and natural-sounding speech that can mimic human intonation and pronunciation with minimal pre-recorded segments.
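The concatenative approach, and its main limitation, can be illustrated with a toy sketch. Everything here is hypothetical: the unit names, the tiny sample lists standing in for recorded audio, and the linear crossfade used to smooth the seams. It is meant only to show why such systems cannot say anything outside their recorded inventory.

```python
# Hypothetical unit inventory: each key is a speech unit, each value a short
# list of audio samples. Real systems store thousands of recorded units.
UNITS = {
    "he": [0.0, 0.2, 0.4, 0.6],
    "el": [0.6, 0.5, 0.3, 0.1],
    "lo": [0.1, 0.3, 0.2, 0.0],
}


def crossfade_join(left: list[float], right: list[float], overlap: int) -> list[float]:
    """Join two sample lists, linearly blending `overlap` samples at the seam."""
    if overlap == 0:
        return left + right
    blended = []
    for i in range(overlap):
        w = (i + 1) / (overlap + 1)  # weight shifts from left to right
        blended.append((1 - w) * left[len(left) - overlap + i] + w * right[i])
    return left[:-overlap] + blended + right[overlap:]


def synthesize(unit_names: list[str], overlap: int = 2) -> list[float]:
    """Concatenate recorded units in order; unknown units raise KeyError."""
    audio = list(UNITS[unit_names[0]])
    for name in unit_names[1:]:
        audio = crossfade_join(audio, UNITS[name], overlap)
    return audio


samples = synthesize(["he", "el", "lo"])
print(len(samples), "samples")
```

Requesting a unit that was never recorded fails with a `KeyError`. This is the flexibility problem that neural models avoid by generating the waveform itself.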

3. Voice Cloning and Emotional Synthesis

Modern TTS systems also incorporate voice cloning and emotional synthesis. Voice cloning uses deep learning to mimic specific voices from a small sample, while emotional synthesis adjusts the TTS output to convey emotions like joy, sadness, or anger. This layer adds realism and user personalization, as seen in platforms like Eleven Labs and Google’s TTS, enhancing engagement and making interactions feel more human-like.

4. Real-Time Processing and API Integration

High-performance TTS models process speech in real time, which makes them well suited to interactive applications such as voice assistants and automated customer service. APIs like those provided by Amazon Polly, Eleven Labs, and Google TTS enable developers to incorporate TTS capabilities into their applications. These APIs expose custom voice settings, language choices, and latency optimizations, all of which are essential for dynamic use cases in customer support and accessibility.
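As a rough illustration of what such an integration involves, the sketch below builds a text-to-speech HTTP request using only Python's standard library, without sending it. The endpoint path, the `xi-api-key` header, the model name, and the `voice_settings` fields are written as assumptions based on Eleven Labs' public REST API and should be checked against the current API reference. The helper name and placeholder credentials are hypothetical.

```python
import json
import urllib.request

API_BASE = "https://api.elevenlabs.io/v1"  # assumption: verify against current docs


def build_tts_request(api_key: str, voice_id: str, text: str) -> urllib.request.Request:
    """Build (but do not send) a text-to-speech HTTP request."""
    payload = {
        "text": text,
        "model_id": "eleven_multilingual_v2",  # assumed model name
        "voice_settings": {"stability": 0.5, "similarity_boost": 0.75},
    }
    return urllib.request.Request(
        url=f"{API_BASE}/text-to-speech/{voice_id}",
        data=json.dumps(payload).encode("utf-8"),
        headers={"xi-api-key": api_key, "Content-Type": "application/json"},
        method="POST",
    )


request = build_tts_request("YOUR_API_KEY", "YOUR_VOICE_ID", "Hello from a TTS API.")
print(request.get_method(), request.full_url)
# Sending it would look like this, returning audio bytes on success:
# with urllib.request.urlopen(request) as response:
#     audio = response.read()
```

Keeping request construction separate from the network call makes the voice settings easy to inspect and test before any credits are spent.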

Sound Investment: Eleven Labs’ Rise to the Top

Eleven Labs has skyrocketed to the top of the AI voice technology market, achieving unicorn status with a $1.1 billion valuation.

TIME Magazine

One standout partnership is with TIME Magazine, which uses Eleven Labs’ “Audio Native” technology to add automated voiceovers to select articles on TIME.com. (TIME Magazine)

Storytel

Eleven Labs has also partnered with Storytel, a leading audiobook platform, to develop the VoiceSwitcher feature, allowing listeners to choose between AI and human narrations. This feature, launched in select markets, is set to expand, making it easier for Storytel users to personalize their audiobook experience. (Storytel)

D-ID

In the field of AI-generated videos, Eleven Labs joined forces with D-ID, a company specializing in creative AI video tools. Together, they enable users to create videos featuring digital avatars with realistic voices from Eleven Labs. (D-ID)

Audacy

Eleven Labs’ collaboration with Audacy, a major audio content provider, enhances Audacy’s programming with synthetic voices that add a personalized touch for listeners. (Audacy)

Their partnerships with organizations like The Washington Post have enabled a new wave of AI-generated audio content, enhancing accessibility and content reach across languages. In gaming, Eleven Labs collaborates with companies like Paradox Interactive, providing immersive character voices and real-time dubbing for global audiences.

Beyond commercial success, Eleven Labs is committed to social impact. Their Impact Program aims to empower one million voices by offering free voice-cloning services to ALS and MND patients. In collaboration with Bridging Voice and The Scott-Morgan Foundation, this initiative helps individuals with speech impairments reclaim their voices, fostering dignity and human connection. A notable success story includes former NFL player Tim Green, who, with Eleven Labs’ technology, recreated his voice for his podcast despite losing his ability to speak due to ALS. (Impact Program)

From Pre-Seed to Series B: Eleven Labs’ Path to Unicorn Status

Eleven Labs has achieved remarkable growth through three funding rounds, raising a total of $101 million in just one year. Their innovative voice AI technology attracted prominent investors, rapidly boosting the company’s valuation to unicorn status.

1. Seed Round – January 25, 2023

The journey began with a $2 million seed round led by Credo Ventures and Concept Ventures. This initial funding gave Eleven Labs the resources to build out its core voice technology, allowing them to establish their platform and attract early customers. Angel investor Peter Czaban also joined this round, helping lay the groundwork for Eleven Labs’ rise in the voice AI market.

2. Series A – May 17, 2023

In May 2023, Eleven Labs secured $19 million in a Series A round, which elevated its valuation to $100 million. This round drew attention from top-tier institutional investors, including Andreessen Horowitz (a16z), Creator Ventures, and SV Angel. Storytel, TheSoul Publishing, and Embark Studios also joined as corporate investors, solidifying partnerships that would later influence Eleven Labs’ strategic collaborations. Additionally, a number of angel investors—such as Nat Friedman, Daniel Gross, Mike Krieger, Brendan Iribe, and Mustafa Suleyman—helped strengthen the company’s industry reach and supported its mission to expand globally.

3. Series B – January 22, 2024

The latest Series B round in January 2024 raised a substantial $80 million, boosting Eleven Labs to unicorn status with a $1.1 billion valuation. Andreessen Horowitz, Sequoia Capital, Smash Capital, SV Angel, BroadLight Capital, and Credo Ventures led the funding, with continued support from angels like Nat Friedman and Daniel Gross. This substantial investment reflects investor confidence in Eleven Labs’ ability to pioneer voice AI technology, positioning them as a leader in the field.

In total, Eleven Labs has raised $101 million across the three rounds ($2 million, $19 million, and $80 million), with the $80 million Series B helping it reach a $1.1 billion valuation as of January 2024.

Speak Your Mind: Eleven Labs and the Future of Voice AI

As Eleven Labs continues to develop advanced voice solutions, they are shaping a future where voice AI becomes an essential part of media, communication, and personal empowerment. Their journey showcases how cutting-edge technology, when used ethically and purposefully, can bridge communication gaps and make a lasting difference in the world.

Eleven Labs specializes in creating advanced voice synthesis solutions that bridge communication gaps and enhance digital media experiences. The company develops AI tools for text-to-speech, voice cloning, multilingual dubbing, and speech-to-speech conversion, allowing creators to produce lifelike voices across languages. Their technology replicates the intricacies of human speech, enabling applications in fields like audiobooks, entertainment, and personalized customer service.

If you’ve got a groundbreaking idea, now’s the time to act. Tools like Eleven Labs prove that innovation in AI is just getting started. Take that first step—transform your creative ideas into reality. And while you’re at it, check out other Venture Kites articles to stay inspired by the latest in tech innovation.

Dive In with Venture Kites

Lessons From Eleven Labs

Identify and Solve Real Needs

Lesson & Why it Matters: Start with a clear, unmet need in the market. Solving genuine problems helps a company quickly find traction and resonate with users.

How to Implement: Conduct deep research on pain points in your industry. Engage with potential users to understand gaps that existing products don’t address.

Example from Eleven Labs: Their founders built Eleven Labs to address poor-quality dubbing by offering lifelike voice technology, meeting a tangible need in entertainment, education, and accessibility.

Adopt Responsible AI Practices Early On

Lesson & Why it Matters: Ethical practices are critical, especially in emerging technologies like AI. A proactive approach to ethical AI builds trust and credibility.

How to Implement: Introduce security measures, seek user consent for data usage, and set up guidelines to prevent misuse of technology.

Example from Eleven Labs: They enforce strict ethical standards, using voice verification and voice-data control to protect user identities.

Maintain a Lean and Agile Approach Early On

Lesson & Why it Matters: Lean structures help startups focus resources on core objectives without overextending financially.

How to Implement: Start with a small, skilled team focused on high-impact tasks and outsource where possible to reduce overhead.

Example from Eleven Labs: Initially, they operated with a small team, focusing on developing one feature at a time, which ensured quality and efficiency.

Continuously Innovate to Stay Competitive

Lesson & Why it Matters: Constant innovation keeps a startup relevant and helps retain user interest, even in a crowded market.

How to Implement: Regularly update products based on user feedback and emerging industry trends.

Example from Eleven Labs: They continually add features, like their multilingual support and real-time voice adjustments, staying ahead in voice tech.

Focus on User Experience and Accessibility

Lesson & Why it Matters: A product that’s easy to use and accessible widens its appeal, retaining users and attracting new ones across demographics.

How to Implement: Invest in UI/UX design, conduct user testing, and add features that enhance accessibility.

Example from Eleven Labs: Their Audio Native feature allows users to access content audibly, making it accessible for visually impaired users and busy readers.

Author Details

Creative Head – Mrs. Shemi K Kandoth

Content By Dork Company

Art By Dork Company

X (Twitter) Feed

🚀 Introducing @elevenlabsio: Redefining the Future of AI Voices! 🗣️🎙️ Dive into how this innovative platform is transforming digital content with lifelike AI voice tech, powered by co-founders Mati Staniszewski and Piotr Dabkowski 👇👇 #AIVoice #VoiceTech #StartupJourney

Venture Kites (@VentureKites), November 8, 2024