Deepseek: The $6 Million AI, Giving $100M Models a Run for Their Money

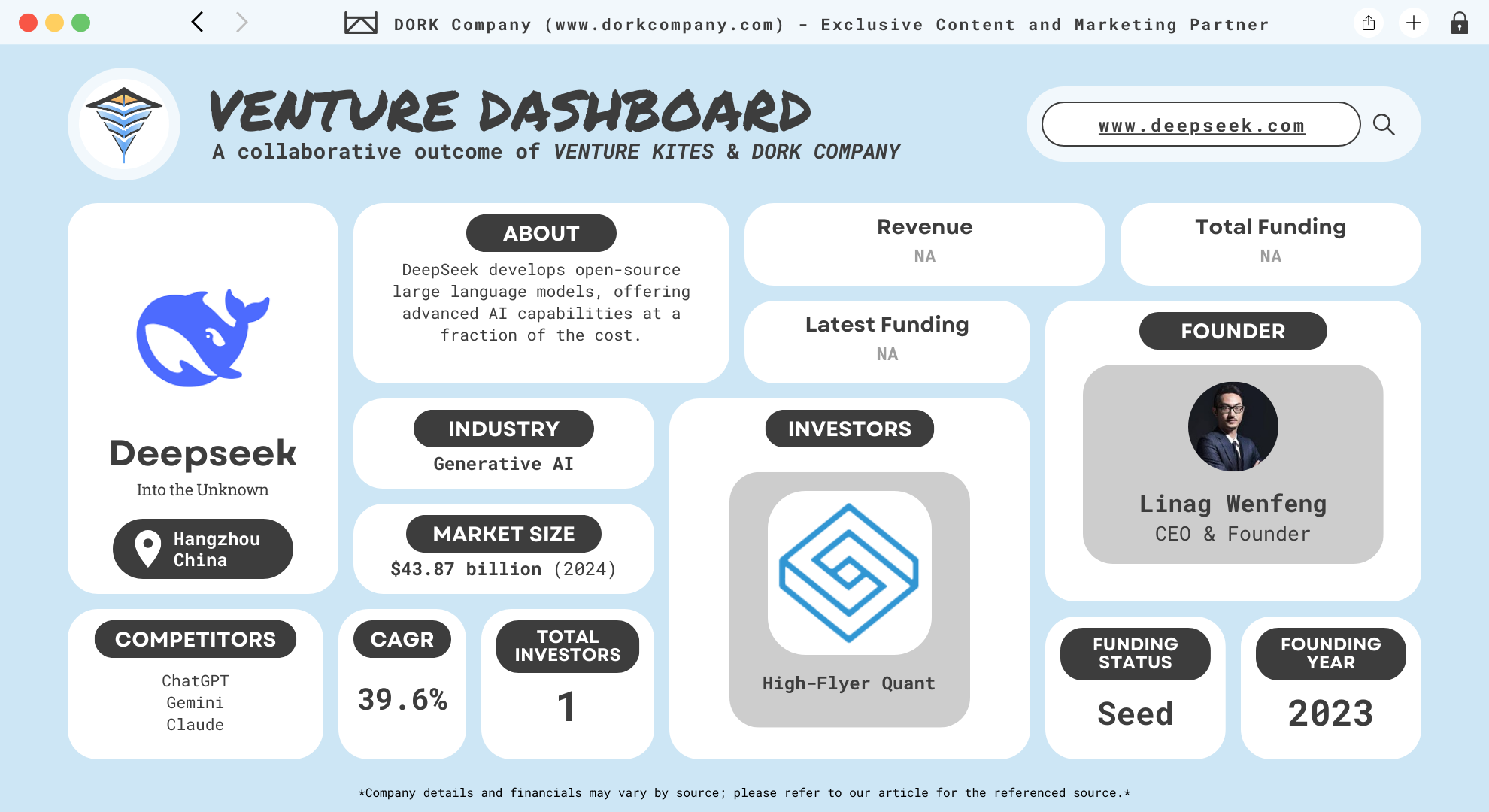

DeepSeek is a Chinese artificial intelligence (AI) company that develops open-source large language models. Founded by Liang Wenfeng in July 2023, the company is based in Hangzhou, Zhejiang, China. Liang, who also co-founded the hedge fund High-Flyer, serves as DeepSeek’s CEO. High-Flyer owns and funds DeepSeek. (Deepseek)

They create AI models that are both efficient and cost-effective. Their flagship model, DeepSeek-R1, delivers performance comparable to leading AI models like OpenAI’s GPT-4. Notably, DeepSeek-R1 was trained at a significantly lower cost—approximately $6 million compared to the $100 million spent on GPT-4. This efficiency is achieved using about 2,000 GPUs, a fraction of the resources used by other major AI companies.

In January 2025, DeepSeek released its first free chatbot app for iOS and Android, based on the DeepSeek-R1 model. By the end of that month, the app had surpassed ChatGPT as the most-downloaded free app on the iOS App Store in the United States. This rapid success led to significant market reactions, including an 18% drop in Nvidia’s share price.

Their open-source approach allows its AI models and training details to be freely available for use and modification. However, reports indicate that the API version hosted in China applies content restrictions in accordance with local regulations, limiting responses on certain topics.

The company’s innovative methods and efficient use of resources have positioned it as a significant player in the AI industry, challenging larger and more established rivals. Their rise has been described as “upending AI” and ushering in “a new era of AI brinkmanship.”

Math Meets Machines: The Genius Behind DeepSeek

Liang Wenfeng, born in 1985 in Guangdong, China, is the founder and CEO of DeepSeek, an AI company established in 2023. He demonstrated exceptional mathematical abilities from a young age, mastering high school concepts during middle school and advancing to university-level material early on. Liang pursued electronic and communication engineering at Zhejiang University, where he developed a keen interest in artificial intelligence (AI) and its applications. (Liang Wenfeng)

After completing his education, Liang co-founded Hangzhou Yakebi Investment Management Co., Ltd. in 2013, aiming to integrate AI with quantitative trading. In 2015, he co-founded Hangzhou Huanfang Technology Co., Ltd., which later became Zhejiang Jiuzhang Asset Management Co., Ltd. These ventures focused on leveraging AI algorithms to enhance financial trading strategies.

In February 2016, Liang, along with two engineering classmates, established Ningbo High-Flyer Quantitative Investment Management Partnership. This hedge fund relied on mathematics and AI to make investment decisions, eliminating human intervention in the trading process. By 2019, High-Flyer had over 10 billion yuan in assets under management and was exclusively using AI in trading, often utilizing Nvidia chips.

Liang’s fascination with AI extended beyond finance. In 2021, while running High-Flyer, he began acquiring thousands of Nvidia GPUs for an AI side project. This move laid the foundation for DeepSeek, which he launched in May 2023. DeepSeek focuses on developing open-source large language models, aiming to make advanced AI technologies more accessible. The company operates as an artificial general intelligence lab, separate from High-Flyer’s financial business, and is fully funded by High-Flyer.

Liang’s journey from a math prodigy in Guangdong to a prominent figure in AI showcases his dedication to innovation and his belief in the transformative potential of artificial intelligence. His work with DeepSeek reflects a commitment to advancing AI research and making its benefits widely available.

A Generative Boom: AI’s Wild West and DeepSeek’s Gold Rush

The generative AI market is experiencing rapid growth, driven by advancements in artificial intelligence technologies. In 2024, the market was valued at approximately $43.87 billion and is projected to reach $967.65 billion by 2032, reflecting a compound annual growth rate (CAGR) of 39.6% during this period. (Fortune Business Insights)

The surge in demand for generative AI applications spans various sectors, including healthcare, finance, and entertainment. Factors such as the adoption of technologies like super-resolution, text-to-image conversion, and text-to-video conversion are significant contributors to this growth. Additionally, there’s an increasing need to modernize workflows across industries, further propelling the market’s expansion.

In terms of regional growth, North America held a dominant position in 2024, accounting for 41% of the market share. This dominance is attributed to the early adoption of AI technologies and substantial investments in research and development. Looking ahead, the Asia-Pacific region is expected to experience the fastest growth, driven by rapid digitalization, strong government support, and a flourishing startup ecosystem. (Precedence Research)

Despite the optimistic outlook, some experts express concerns about a potential bubble in the generative AI sector. The substantial investments pouring into the industry may not yet align with the actual revenues generated, leading to caution among investors and stakeholders.

Deep-ly Committed: The Vision That’s Changing AI

They envision a future where AI technologies are accessible, efficient, and capable of addressing complex global challenges. By focusing on open-source models, the company aims to democratize AI, allowing researchers, developers, and organizations worldwide to benefit from and contribute to AI advancements.

The company addresses several key problems in the AI industry. Firstly, it tackles the high costs associated with developing and deploying large language models by creating efficient models that require fewer computational resources. Secondly, DeepSeek promotes transparency and collaboration in AI development through its open-source approach, enabling a broader community to engage with and improve upon its technologies. Lastly, the company focuses on enhancing the reasoning capabilities of AI models, making them more effective in understanding and generating human-like text.

They operate on a business model that emphasizes open-source development and collaboration. By releasing its AI models and codebases to the public, the company fosters a community-driven approach to innovation. This strategy not only accelerates the improvement of AI technologies but also positions DeepSeek as a leader in the AI community. While the company has not detailed specific commercialization plans, its focus on research and open-source contributions allows it to navigate regulatory environments effectively and avoid stringent provisions that apply to consumer-facing technologies.

Model Citizen: DeepSeek’s Powerful AI Toolbox

DeepSeek has developed a series of advanced AI models, each designed to address specific challenges in natural language processing, coding, mathematics, and multimodal understanding.

DeepSeek-R1

DeepSeek-R1 is their inaugural open-source large language model, introduced in 2023. Despite being trained with a budget of less than $6 million and utilizing approximately 2,000 GPUs, DeepSeek-R1 delivers performance comparable to leading models like OpenAI’s GPT-4. The model employs reinforcement learning techniques to enhance its reasoning capabilities, allowing it to generate accurate and contextually relevant responses. This efficiency in training and operation has made DeepSeek-R1 a notable achievement in the AI community.

DeepSeek-V2

Released in May 2024, DeepSeek-V2 represents a significant advancement in AI model architecture. It comprises 236 billion parameters, with 21 billion activated per token, and supports a context length of up to 128,000 tokens. The model introduces innovative architectures, including Multi-head Latent Attention (MLA) and DeepSeekMoE. MLA compresses the Key-Value (KV) cache into a latent vector, enhancing inference efficiency, while DeepSeekMoE enables economical training through sparse computation. These innovations result in a 42.5% reduction in training costs and a 93.3% decrease in KV cache size compared to previous models.

DeepSeek-V2-Lite

Alongside DeepSeek-V2, DeepSeek released a lighter version known as DeepSeek-V2-Lite. This model contains 15.7 billion parameters, with 2.4 billion activated per token, and a context length of 32,000 tokens. It shares the same architectural innovations as its larger counterpart, including MLA and DeepSeekMoE, but is designed for applications requiring lower computational resources. Despite its reduced size, DeepSeek-V2-Lite maintains strong performance in various language understanding tasks.

DeepSeek-V3

In December 2024, DeepSeek unveiled DeepSeek-V3, further building upon the advancements of its predecessors. This model consists of 671 billion parameters, with 37 billion activated per token, and supports a context length of up to 128,000 tokens. DeepSeek-V3 incorporates multi-token prediction, allowing for faster decoding of multiple tokens simultaneously. The model was pre-trained on 14.8 trillion tokens from a multilingual corpus, with a higher ratio of math and programming content, enhancing its capabilities in complex reasoning tasks.

DeepSeek-Coder

DeepSeek-Coder is a model specifically designed to assist with coding tasks. It offers features such as code autocompletion, debugging assistance, and code generation across various programming languages. The model enhances developer productivity by understanding coding context and providing relevant suggestions, thereby reducing development time and effort.

DeepSeek-Math

Tailored for mathematical problem-solving, DeepSeek-Math is specialized in handling complex mathematical tasks. It assists in solving equations, performing calculations, and providing step-by-step explanations for various mathematical problems. This model is particularly useful for educational purposes, research, and applications requiring advanced mathematical reasoning.

DeepSeek-VL

Expanding into multimodal AI, DeepSeek introduced DeepSeek-VL, a vision-language model capable of understanding and processing both textual and visual information. This model excels in tasks that require the integration of visual context with language understanding, such as image captioning, visual question answering, and interpreting complex visual data. DeepSeek-VL is valuable in applications like digital content creation and accessibility tools.

Mixing It Up: How DeepSeek’s Mixture-of-Experts Model is a Game-Changer

DeepSeek-V3 is a state-of-the-art Mixture-of-Experts (MoE) language model designed for superior performance, efficient inference, and cost-effective training. It features 671 billion total parameters, with 37 billion activated per token, enabling it to deliver high-quality outputs across diverse natural language processing (NLP) tasks. It builds upon the previous DeepSeek-V2 model by introducing innovative load balancing strategies, multi-token prediction, and low-precision training.

Innovative Model Architecture

DeepSeek-V3 uses a Transformer-based architecture with several cutting-edge modifications to enhance efficiency:

- Multi-Head Latent Attention (MLA): Compresses key-value (KV) cache into a latent vector, reducing memory usage and improving inference speed.

- DeepSeekMoE (Mixture of Experts): Uses auxiliary-loss-free load balancing to efficiently distribute computation across multiple experts while minimizing performance degradation.

- Multi-Token Prediction (MTP): Allows the model to predict multiple tokens at once, increasing throughput and training efficiency.

These features make DeepSeek-V3 one of the most efficient MoE models available.

Training and Compute Efficiency

DeepSeek-V3 is trained on 14.8 trillion high-quality tokens, ensuring a diverse knowledge base and robust understanding of various topics. Training follows a three-stage approach:

- Pre-Training: The model learns fundamental language patterns from extensive datasets.

- Supervised Fine-Tuning (SFT): Enhances the model’s ability to generate human-like responses.

- Reinforcement Learning (RL): Further refines performance using reward-based optimization techniques.

The model was trained in just 2.788 million GPU hours using 2048 NVIDIA H800 GPUs—a remarkably low cost of $5.576 million compared to the massive expenditures of other AI labs.

Performance Benchmarks

DeepSeek-V3 demonstrates best-in-class performance across multiple NLP and coding benchmarks:

- MMLU (General Knowledge): 88.5% accuracy, outperforming all other open-source models.

- Mathematical Reasoning (MATH-500): 90.2%, ranking among the top AI models.

- Coding (Codeforces Competitions): Achieves a high percentile score, excelling in competitive programming.

- Factual Knowledge (GPQA-Diamond): Surpasses open-source competitors while rivaling GPT-4o and Claude 3.5 Sonnet.

These results confirm that DeepSeek-V3 is not just another large model but a top-performing AI with superior reasoning, math, and coding abilities.

Low-Precision Training and Memory Optimization

DeepSeek-V3 uses FP8 mixed-precision training, a significant breakthrough that reduces memory consumption while maintaining accuracy. Some key aspects include:

- Fine-grained quantization: Prevents numerical instability by optimizing precision at different model layers.

- FP8 GEMM operations: Improve matrix multiplication efficiency.

- Low-precision optimizer states: Uses BF16 for optimizer tracking, further reducing storage needs.

These optimizations allow DeepSeek-V3 to achieve exceptional performance at a fraction of the cost of similar-sized models.

Inference and Deployment Strategy

DeepSeek-V3 is designed for real-world scalability, with separate strategies for prefilling and decoding:

- Prefilling Stage: Uses Tensor Parallelism (TP4) and Expert Parallelism (EP32) to efficiently load data across GPUs.

- Decoding Stage: Implements InfiniBand-based communication for real-time processing, reducing latency.

With these strategies, DeepSeek-V3 delivers high throughput and fast response times, making it ideal for deployment in large-scale AI applications.

Bedrock to Boardrooms: Who’s Teaming Up with DeepSeek?

The release of DeepSeek’s AI model led to a substantial impact on global markets. On January 27, 2025, major U.S. technology stocks experienced a sharp decline, with companies like Nvidia, Microsoft, and Alphabet witnessing significant drops in their stock prices. This market reaction underscored the perceived threat DeepSeek posed to existing AI giants. (Financial Times)

Their AI model has been lauded for its efficiency, achieving performance levels comparable to top-tier models from Silicon Valley while utilizing fewer resources. This accomplishment has been described as a “Sputnik moment” for American AI, highlighting China’s rapid advancements in the field. (The Atlantic)

The company’s success has also prompted discussions about the future of AI development and competition. Analysts suggest that their approach could democratize AI access, compelling U.S. firms to reduce prices and enhance their models to maintain competitiveness. Despite concerns regarding data usage and potential censorship, Their cost-effectiveness and performance have attracted early adopters, indicating a potential shift in the AI industry. Their AI Assistant app quickly became the top-rated free app on the U.S. Apple App Store by January 27, 2025, surpassing established competitors like ChatGPT.

A notable collaboration is with Amazon Web Services (AWS), which has integrated their R1 AI model into its platforms, including Amazon Bedrock and SageMaker. This integration allows developers to access and deploy their models efficiently, promoting wider adoption of their technology. (CRN)

In Europe, DeepSeek’s AI models have been adopted by companies such as Novo AI, a German startup that transitioned from using OpenAI’s ChatGPT to DeepSeek due to its cost-effectiveness and ease of integration. (Reuters)

Six Million Dollar Model: How DeepSeek is Building AI on a Budget

DeepSeek, founded in 2023 by Liang Wenfeng, operates as a privately funded artificial intelligence (AI) company without external investors. The company is primarily financed by High-Flyer, a quantitative hedge fund co-founded by Liang in 2015. High-Flyer has built a substantial portfolio using AI-driven trading algorithms, managing assets estimated at around $13.79 billion. (Reuters)

In January 2025, DeepSeek released its AI model, developed over two months at a cost of under $6 million. This model was trained using approximately 2,000 Nvidia H800 GPUs, highlighting their cost-effective approach to AI development.

Following the model’s release, their AI Assistant app quickly became the top free app on the U.S. Apple App Store, surpassing competitors like ChatGPT. This rapid adoption indicates significant user interest, which could translate into substantial future revenue streams.

As of now, their primary revenue comes from offering developer access to its models. The company charges $2.19 per million output tokens, a competitive rate compared to OpenAI’s $60 for a similar service. While specific revenue figures are not publicly disclosed, analysts estimate that with around 3 million paying users, their valuation could reach at least $1 billion. (Forbes)

Deep Thoughts: Why DeepSeek’s AI Revolution is Just Getting Started

DeepSeek is an artificial intelligence startup that is reshaping the AI industry with powerful, cost-effective models. Unlike many AI companies that spend billions on training large models, DeepSeek has proven that AI development can be efficient and scalable. It focuses on natural language processing (NLP), coding assistance, mathematics, and multimodal AI.

At its core, DeepSeek builds large language models (LLMs) that rival OpenAI’s GPT-4 and Google’s Gemini models. Its flagship model, DeepSeek-V3, is a Mixture-of-Experts (MoE) model with 671 billion parameters, but it activates only 37 billion parameters per token, making it cheaper and faster to run. This approach allows DeepSeek to deliver high performance at a fraction of the cost.

Beyond language models, DeepSeek has also developed DeepSeek-Coder, an AI designed for code generation, debugging, and programming assistance. It competes with models like GitHub Copilot and OpenAI Codex, offering developers a cost-effective AI companion for software development. DeepSeek also released DeepSeek-Math, which specializes in solving complex mathematical problems with step-by-step reasoning. If you liked this deep dive, you’ll love more stories on Venture Kites. We cover startups, tech disruptions, and big business moves. Check out other articles and stay ahead of the game.

At a Glance with DORK Company

Dive In with Venture Kites

Lessons From Deepseek

Bet on a Bigger Future

The Lesson & Why It Matters: Great startups don’t just solve today’s problems—they position themselves for the next 10 years.

Implementation: Look at where the industry is heading. Build for what customers will need, not just what they need today.

How DeepSeek Implements It: DeepSeek’s models focus on efficiency, preparing for a future where AI must be scalable, affordable, and widely accessible.

Challenge the Narrative

The Lesson & Why It Matters: If people believe something is impossible, prove them wrong. The best startups break industry assumptions.

Implementation: Don’t accept industry rules as fact. Question why things are done a certain way and explore better alternatives.

How DeepSeek Implements It: Everyone assumed large AI models required billions in investment. DeepSeek proved that wrong with low-cost, high-performance AI.

Ignore the Hype, Focus on Fundamentals

The Lesson & Why It Matters: Many tech companies chase the latest trends without a long-term plan. Startups that focus on strong fundamentals last longer.

Implementation: Avoid building tech just because it’s trendy. Solve problems that will still exist 5–10 years from now.

How DeepSeek Implements It: While many AI startups focused on flashy features, DeepSeek worked on making AI models scalable, cost-effective, and powerful.

Use Unused Opportunities in Big Markets

The Lesson & Why It Matters: Startups often copy existing ideas instead of looking for untapped opportunities. The best companies find new gaps to fill.

Implementation: Look for blind spots in big industries. Ask, “Where are customers unhappy?” and fix that problem before anyone else does.

How DeepSeek Implements It: DeepSeek realized that AI models were too expensive. Instead of competing directly on size, they focused on cost-efficient AI—a need that no one else had addressed yet.

Being Underrated Can Be an Advantage

The Lesson & Why It Matters: Sometimes, flying under the radar helps you innovate without pressure.

Implementation: Instead of chasing media attention, focus on execution. Let results speak for themselves.

How DeepSeek Implements It: Before its AI assistant became the most downloaded app in the U.S., DeepSeek operated with little media coverage.

Youtube Shorts

Author Details

Creative Head – Mrs. Shemi K Kandoth

Content By Dork Company

Art & Designs By Dork Company