Moreh Inc.: Scaling AI Without Breaking a Sweat

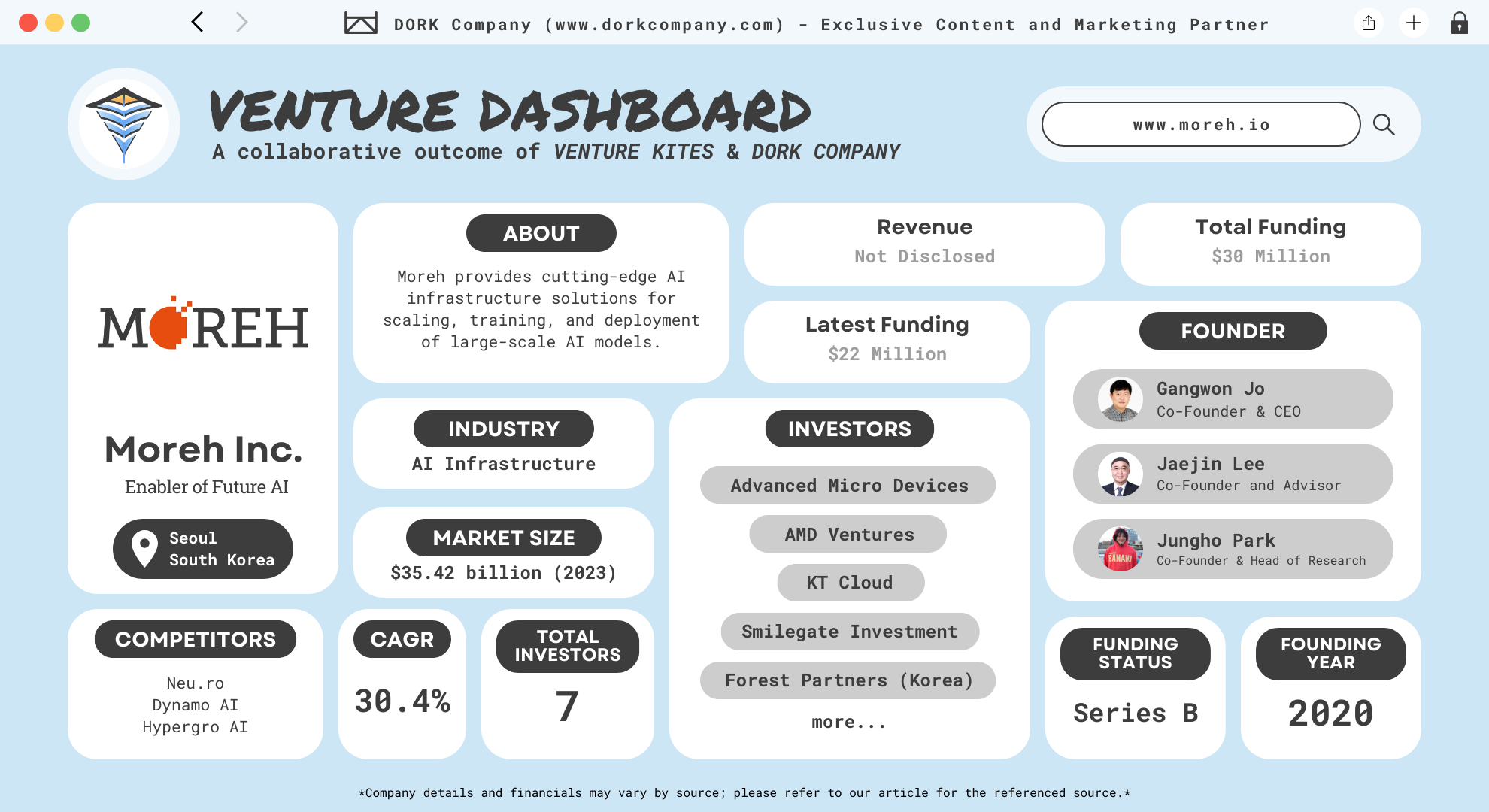

Moreh Inc. is a technology company specializing in AI hardware and software solutions designed to accelerate machine learning and deep learning processes. The company focuses on developing advanced AI computing platforms that offer high performance, efficiency, and scalability, catering to industries like autonomous vehicles, robotics, and large-scale data centers.

Founded in 2020, Moreh is addressing the complexities of AI scalability. The company’s primary product, the MoAI Platform, helps organizations train, fine-tune, and deploy massive AI models, like large language models (LLMs), using high-performance infrastructure. These models require immense computing power, which is where Moreh’s expertise comes into play. (Moreh)

Based in South Korea and Silicon Valley, Moreh aims to solve one of AI’s biggest challenges—making hyperscale AI infrastructure accessible. Their platform allows enterprises to scale AI models across diverse hardware environments, like GPUs and NPUs, without hitting performance roadblocks. In simple terms, if you have a massive AI model to train, Moreh makes sure it can run smoothly, no matter the size. With backing from major tech players like AMD and KT Cloud, Moreh has positioned itself as a critical enabler in the AI ecosystem.

Meet the Minds Behind Moreh: A High-Performance Trio

Moreh Inc. was founded in 2020 by a team of highly experienced computer scientists with deep roots in AI infrastructure and high-performance computing. The founding members—Gangwon Jo, Jungho Park, and Jaejin Lee—brought together years of expertise in GPU-accelerated computing and software optimization, aiming to solve one of AI’s most pressing issues: scalable infrastructure.

Gangwon Jo (CEO and Co-Founder)

Gangwon Jo, the CEO and the driving force behind Moreh, has a Ph.D. in Electrical Engineering and Computer Science from Seoul National University, where he also completed his undergraduate studies with highest honors. His research focused on compilers and runtime systems for AI accelerators. He spearheaded the development of Moreh’s flagship MoAI platform, which offers infrastructure solutions that make hyperscale AI more accessible and efficient. (Gangwon Jo)

Jungho Park (Head of Research and Co-Founder)

Jungho Park, Moreh’s Head of Research, has more than a decade of experience in GPU-accelerated computing. He holds a Ph.D. in the same field from Seoul National University and is also the CEO and co-founder of ManyCoreSoft, another startup that focuses on high-performance computing solutions. His role at Moreh involves designing and implementing infrastructure solutions for large-scale AI, ensuring that companies can efficiently deploy AI models at scale. (Jungho Park)

Jaejin Lee (Co-Founder and Advisor)

Jaejin Lee, a co-founder and advisor at Moreh, is a professor at Seoul National University and the dean of its Graduate School of Data Science. He is an IEEE Fellow and an expert in heterogeneous supercomputing. Lee’s involvement adds academic depth to the company, as he leads the research group responsible for groundbreaking work on supercomputers and their software.

The Founding Story

The founders came together to tackle the growing challenge of running large AI models, like GPT and other LLMs, on efficient and scalable infrastructure. They recognized the limitations in existing AI infrastructure—particularly with scaling models across multiple GPUs—and developed the MoAI platform to solve these issues. Their goal was to bridge the gap between AI applications and the underlying hardware, allowing businesses to leverage hyperscale AI without worrying about the technical complexities.

AI Scaling Wars: May the Infrastructure Be With You

The AI infrastructure market is booming, and it’s no longer just hype; it’s a goldmine for businesses. The market was valued at $35.42 billion in 2023 and is expected to reach a staggering $223.45 billion by 2030, growing at a 30.4% CAGR. With the rise of large language models (LLMs) like GPT and widespread AI adoption, companies are scrambling to invest in infrastructure capable of handling these computational behemoths. (Grand View Research)
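The growth figures above can be sanity-checked with the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1. The short Python sketch below applies it to the two Grand View Research figures quoted here; the function names `cagr` and `project` are purely illustrative. Treating 2023 to 2030 as seven compounding years gives an implied rate of roughly 30.1%, while compounding the 2023 figure at the quoted 30.4% lands near $227 billion rather than $223.45 billion. That small gap suggests the quoted rate is measured over a slightly different forecast window, so the report itself is the place to confirm the exact period.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Value after compounding start_value at `rate` for `years` years."""
    return start_value * (1 + rate) ** years


# Figures quoted in the article (USD billions).
MARKET_2023 = 35.42
MARKET_2030 = 223.45
QUOTED_CAGR = 0.304
YEARS = 2030 - 2023  # assumes compounding over all seven years

implied = cagr(MARKET_2023, MARKET_2030, YEARS)
projected = project(MARKET_2023, QUOTED_CAGR, YEARS)

print(f"Implied CAGR 2023-2030: {implied:.1%}")
print(f"2023 value compounded at 30.4% for {YEARS} years: ${projected:.1f}B")
```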

What is AI Infrastructure?

At its core, AI infrastructure refers to the technology stack—hardware, software, and networking—needed to develop, train, and deploy AI models. This includes specialized hardware like GPUs and TPUs, robust software frameworks like TensorFlow and PyTorch, and scalable networking solutions.

Why is it Booming?

The key driver is the surge in AI applications such as machine learning, deep learning, and generative AI. Businesses need more powerful, flexible systems to process complex algorithms and manage vast amounts of data efficiently. Cloud service providers (CSPs) such as Amazon Web Services (AWS), Google Cloud, and Microsoft Azure dominate the market with flexible, scalable AI offerings. These cloud platforms let companies offload the heavy lifting and reduce the need for expensive on-premises hardware.

Who’s Leading the Charge?

Big players like NVIDIA, IBM, and Microsoft are setting the pace, focusing on hybrid AI solutions that allow companies to switch seamlessly between cloud and on-premises setups. Innovation is driving competition, with companies investing heavily in R&D to stay ahead.

Scaling Dreams: Moreh’s Mission to Power AI at Every Level

Moreh Inc.’s mission is simple: to create a full-stack AI infrastructure platform that optimizes the entire lifecycle of hyperscale AI, from model development to deployment. They aim to make AI technology accessible to a wider audience, leveling the playing field for businesses across industries.

Their vision is to empower companies to utilize AI at scale without being limited by infrastructure issues. Moreh focuses on making hyperscale AI affordable and efficient, offering solutions that enable organizations to run large language models (LLMs) and other complex AI systems seamlessly.

The Problems They Solve

Moreh tackles the infrastructure bottlenecks that slow down AI progress. Companies struggle with issues like cluster scalability, performance portability, and the orchestration of diverse hardware accelerators (GPUs, NPUs, etc.). Moreh’s MoAI platform solves these challenges, allowing AI models to run smoothly across different hardware configurations.

Business Model

Moreh operates on a B2B model, offering its MoAI platform to enterprises and cloud service providers. By enabling businesses to scale their AI infrastructure without heavy upfront investments, they give companies flexibility in how they approach AI. Their platform is also compatible with popular AI frameworks like PyTorch and TensorFlow, which makes it easier for teams to bring existing models and code onto it.

A Stack Above: Moreh’s MoAI Platform and Beyond

When it comes to AI infrastructure, Moreh Inc. doesn’t just meet the bar; they set it higher. Their suite of products and services is designed to simplify AI at scale, allowing businesses to unlock the true potential of AI without the typical headaches.

MoAI Platform

The MoAI platform is the backbone of Moreh’s offerings. It is a full-stack AI infrastructure software built to optimize large-scale AI models, especially for those working with large language models (LLMs) and multimodal AI. The platform is compatible with popular AI frameworks like PyTorch and TensorFlow, making it accessible to a wide range of users.

The platform stands out for its GPU virtualization capabilities, which allow businesses to allocate resources dynamically and scale seamlessly across multiple GPUs without performance loss. The MoAI platform also supports a variety of hardware accelerators, including AMD GPUs, making it versatile for different AI workloads.

Fine-tuning and Training Large Models

One of Moreh’s key offerings is its ability to fine-tune and train massive models. In a recent project, Moreh fine-tuned the 405-billion-parameter version of Llama 3.1 on 192 AMD GPUs. They also trained one of the largest Korean language models, with 221 billion parameters, showcasing the platform’s power for LLM tasks.
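To see why a model of this size calls for a large GPU cluster, a back-of-the-envelope memory estimate helps. The Python sketch below is not Moreh’s method, and its byte counts are assumptions rather than figures from the article: BF16 weights at 2 bytes per parameter, and, for full-parameter fine-tuning with mixed-precision Adam, the commonly cited figure of about 16 bytes per parameter (16-bit weights and gradients plus 32-bit master weights and two 32-bit optimizer moments). The article does not say whether the fine-tuning was full-parameter, so the 16-byte case is illustrative. The sketch ignores activations and assumes the state is split evenly across all 192 GPUs, so real per-GPU usage would be higher.

```python
# Back-of-the-envelope memory estimate for a 405B-parameter model.
# Assumptions (not from the article): the bytes-per-parameter values below,
# activations ignored, and state split evenly across all GPUs.

NUM_PARAMS = 405e9   # Llama 3.1 405B
NUM_GPUS = 192       # GPU count quoted in the article
BYTES_PER_GB = 1e9   # decimal gigabytes

# Assumed bytes per parameter for two scenarios.
BF16_WEIGHTS_ONLY = 2      # 16-bit weights only
MIXED_PRECISION_ADAM = 16  # 2 (weights) + 2 (grads) + 4 (fp32 master) + 4 + 4 (Adam moments)


def total_gb(num_params: float, bytes_per_param: float) -> float:
    """Total memory in GB needed to hold num_params at bytes_per_param each."""
    return num_params * bytes_per_param / BYTES_PER_GB


def per_gpu_gb(num_params: float, bytes_per_param: float, num_gpus: int) -> float:
    """Memory per GPU if the total is sharded evenly across num_gpus."""
    return total_gb(num_params, bytes_per_param) / num_gpus


for label, bpp in [("BF16 weights only", BF16_WEIGHTS_ONLY),
                   ("Weights + grads + Adam state", MIXED_PRECISION_ADAM)]:
    print(f"{label}: {total_gb(NUM_PARAMS, bpp):,.0f} GB total, "
          f"{per_gpu_gb(NUM_PARAMS, bpp, NUM_GPUS):.1f} GB per GPU")
```

The point is the order of magnitude: several terabytes of training state cannot fit on any single accelerator, so the work has to be partitioned across many devices.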

Heterogeneous Accelerator Support

Moreh’s platform isn’t limited to NVIDIA GPUs. It’s designed to run efficiently on non-NVIDIA GPUs, NPUs, and other accelerators, offering businesses flexibility to use the most cost-effective hardware without worrying about software compatibility.

Distributed Runtime System

The MoAI platform provides a distributed runtime system that ensures efficient parallelization of AI models across different hardware nodes. This feature simplifies the process of managing and orchestrating large-scale AI models, making it easier to train them in diverse environments.

Behind the Curtain: How Moreh Makes AI Magic Happen

Moreh Inc. is all about simplifying the complex world of AI infrastructure. Their MoAI Platform is designed to make running large-scale AI models easy and efficient for businesses, even those with limited infrastructure experience.

MoAI: The Heart of Moreh’s Innovation

At the core of Moreh’s technology is their MoAI platform, a full-stack infrastructure designed to handle the complex demands of modern AI models. It’s like the ultimate toolkit for AI engineers, supporting major frameworks like PyTorch and TensorFlow. What sets MoAI apart is its ability to optimize large AI workloads, making efficient use of GPU resources across clusters of processors. This means businesses can scale their AI operations seamlessly, whether they’re training a model on a handful of GPUs or thousands.

Outperforming the Competition

Moreh has made headlines by outpacing traditional AI heavyweights. In a recent test, Moreh’s LLM training setup, built on its partnership with AMD, outperformed NVIDIA hardware on several key metrics. By combining AMD’s Instinct MI250 GPUs with its own software stack, Moreh has achieved breakthroughs in AI model training efficiency and speed.

Fine-Tuned for Success

The MoAI platform also excels in GPU virtualization, allowing fine-grained control over resource allocation. This flexibility ensures that businesses can scale their operations efficiently, using only what they need when they need it. Whether it’s a small model or a 221-billion-parameter LLM, MoAI’s platform handles the load with ease.

Dream Work: How Moreh Is Reshaping the AI Market

Backed by major industry players like AMD and KT Cloud, Moreh has become a trailblazer in providing scalable AI solutions. By integrating multiple types of accelerators, such as GPUs and NPUs, into their infrastructure, they’re helping enterprises move to more efficient AI deployments. The successful training of a 221-billion-parameter large language model (LLM) is just one of many examples that demonstrate the platform’s power.

They also topped the Hugging Face Open LLM Leaderboard in early 2024, placing them among the world’s top AI companies in terms of model performance.

AMD Partnership

Moreh’s collaboration with AMD has been a game-changer. AMD’s high-performance GPUs are integrated with Moreh’s MoAI Platform, helping the company achieve impressive results in AI model training and scaling. This partnership allows Moreh to leverage AMD’s Instinct MI250 accelerators, creating an AI platform architecture that competes directly with NVIDIA’s solutions, which currently dominate the market. This partnership was crucial in Moreh’s success in training one of the largest Korean language models with 221 billion parameters.

KT Cloud Collaboration

KT Cloud, a leading cloud service provider in South Korea, has also heavily invested in Moreh. In 2023, KT and KT Cloud poured 15 billion won ($11.65 million) into Moreh to support its AI software development and to strengthen South Korea’s domestic AI infrastructure. The collaboration with KT helps Moreh reduce reliance on foreign solutions, particularly in the AI GPU market. By integrating Moreh’s AI infrastructure software with KT’s cloud capabilities, the two are working towards building a fully domestic AI solution for South Korea, increasing the country’s AI competitiveness.

Fueling the Future: The Millions Behind Moreh’s Success

Moreh Inc. is making waves in the AI industry, backed by impressive funding rounds. In 2023, the company secured a $22 million Series B round, with key investors like AMD and KT Cloud. This recent injection of capital brings their total funding to $30 million, positioning them as a strong player in the AI infrastructure space. The funds raised will be used for further development of their flagship MoAI platform, which aims to optimize hyperscale AI applications and infrastructure. (Tracxn)

The Series B closed in July 2023 and drew support from both corporate and institutional investors. AMD and KT Cloud led the charge, while institutional backers included Smilegate Investment and Forest Partners, who have shown confidence in Moreh’s ability to redefine AI infrastructure.

Moreh to Come: The Future of AI Infrastructure Looks Bright

Moreh Inc. is setting a new standard in AI infrastructure. From their powerful MoAI Platform to partnerships with industry leaders like AMD and KT Cloud, they are solving real problems in scaling AI models. By providing seamless infrastructure solutions for large language models, Moreh is helping companies run their AI workloads efficiently and cost-effectively.

Their significant funding rounds, including the $22 million Series B, demonstrate investor confidence in their technology and vision. As Moreh continues to expand, their contributions will likely reshape the AI landscape. They have already shown they can handle some of the world’s largest AI models, and their success in training a 221-billion-parameter model is a testament to their expertise.

Ready to turn your bold ideas into reality? Moreh Inc. shows that with the right technology, groundbreaking innovation is within reach. From leading in the AI infrastructure market to securing major funding, Moreh is setting the pace for the future of AI. Their journey highlights the importance of scalable, powerful infrastructure in driving AI forward.

If Moreh’s story inspires you, now is the time to act. Start building on your own ideas. Who knows? You could be the next disruptor in your industry. Check out more articles on Venture Kites to dive deep into the world of emerging startups and technologies. There’s always something new to discover!


Dive In with Venture Kites

Lessons From Moreh Inc.

The Power of Specialization

The Lesson & Why it Matters: Focus on a niche that aligns with your strengths. Specializing allows you to deliver targeted, high-quality solutions.

Implementation: Identify an underserved market segment and dominate it with specialized products or services.

How Moreh Implements it: Moreh focuses exclusively on AI infrastructure, helping businesses manage hyperscale AI models. Their deep focus makes them leaders in this niche.

Invest in Research and Development

The Lesson & Why it Matters: Constant innovation keeps you ahead of competitors.

Implementation: Dedicate resources to developing new technologies and improving existing ones.

How Moreh Implements it: With significant R&D investments fueled by their funding, Moreh is continuously enhancing their MoAI Platform to stay ahead in the AI infrastructure space.

Stay Ahead of Market Trends

The Lesson & Why it Matters: Understanding market trends allows you to be proactive rather than reactive.

Implementation: Use market research to anticipate industry shifts and adjust your strategy accordingly.

How Moreh Implements it: Moreh positioned itself in the rapidly growing AI infrastructure market, capitalizing on the increasing demand for hyperscale AI.

Collaborate with Industry Giants

The Lesson & Why it Matters: Strategic partnerships accelerate growth and open doors to new markets.

Implementation: Align with partners that fill gaps in your offerings and provide complementary strengths.

How Moreh Implements it: Moreh partners with AMD and KT Cloud, leveraging their hardware and cloud expertise to scale their infrastructure.

Leverage Domestic Strengths for Global Growth

The Lesson & Why it Matters: Strong domestic roots can serve as a launchpad for global expansion.

Implementation: Build a robust local presence while planning for international expansion.

How Moreh Implements it: Moreh’s partnership with KT Cloud strengthens South Korea’s AI infrastructure, giving them a strong domestic base to expand globally.

Author Details

Creative Head – Mrs. Shemi K Kandoth

Content By Dork Company

Art By Dork Company


X (Twitter) Feed

🚀 Moreh is changing the AI game! They’re building the future of AI with cutting-edge solutions that scale faster than ever.

— Venture Kites (@VentureKites) October 20, 2024

Full Story @VentureKites https://t.co/xhPYsiFdWq

Proudly crafted with @dork_company https://t.co/w7BPjtIdM3 #AI #Startups #TechNews #VentureKites pic.twitter.com/ArnaBuZCKo